Monte Carlo Simulation

If you hear a “prominent” economist using the word ‘equilibrium,’ or ‘normal distribution,’ do not argue with him; just ignore him, or try to put a rat down his shirt.

Nassim Nicholas Taleb

Monte Carlo simulation refers to a bewildering range of techniques that estimate unknown quantities by repeated random sampling.
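To make the idea concrete, here is a minimal Python sketch. The function name `mc_integrate` and the test integrand are illustrative choices, not taken from the original. It estimates the integral of x² over [0, 1], whose exact value is 1/3, by averaging the integrand at uniformly random points.

```python
import random

def mc_integrate(f, n, seed=None):
    """Estimate the integral of f over [0, 1] as the mean of f at n uniform random points."""
    rng = random.Random(seed)  # private generator so runs are reproducible
    total = 0.0
    for _ in range(n):
        total += f(rng.random())  # rng.random() is uniform on [0.0, 1.0)
    return total / n

# The exact value of the integral of x**2 over [0, 1] is 1/3.
estimate = mc_integrate(lambda x: x * x, 100_000, seed=1)
print(estimate)
```

Increasing `n` tightens the estimate around 1/3; fixing `seed` only makes a run repeatable, it does not make it more accurate.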

This method takes its name from Monte Carlo, the district of Monaco famous for its casinos and gambling attractions. Like gambling and other games of chance, the simulation relies on producing random samples of data, hence the fitting name ‘Monte Carlo simulation’. As Stanislaw Ulam recalled:

The first thoughts and attempts I made to practice [the Monte Carlo method] were suggested by a question which occurred to me in 1946 as I was convalescing from an illness and playing solitaires. The question was what are the chances that a Canfield solitaire laid out with 52 cards will come out successfully? After spending a lot of time trying to estimate them by pure combinatorial calculations, I wondered whether a more practical method than “abstract thinking” might not be to lay it out say one hundred times and simply observe and count the number of successful plays. This was already possible to envisage with the beginning of the new era of fast computers, and I immediately thought of problems of neutron diffusion and other questions of mathematical physics, and more generally how to change processes described by certain differential equations into an equivalent form interpretable as a succession of random operations. Later… [in 1946, I] described the idea to John von Neumann and we began to plan actual calculations.

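According to the Law of Large Numbers, as the number of independent, identically distributed random variables grows, their sample mean converges to their theoretical (expected) mean. Monte Carlo simulation relies on this: an average over many random samples approximates the quantity we are after.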

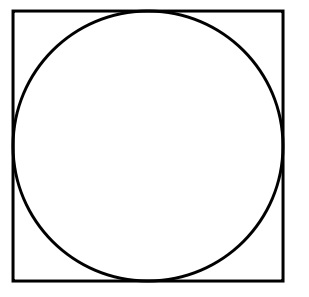
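A short Python sketch of the Law of Large Numbers, assuming a fair six-sided die whose theoretical mean is (1 + 2 + … + 6)/6 = 3.5. The helper `running_means` is hypothetical, written only for this illustration. The printed sample means should drift toward 3.5 as the number of rolls grows, although any single run fluctuates along the way.

```python
import random

def running_means(n_rolls, seed=None):
    """Roll a fair six-sided die n_rolls times; return the sample mean after each roll."""
    rng = random.Random(seed)
    means = []
    total = 0
    for i in range(1, n_rolls + 1):
        total += rng.randint(1, 6)  # randint is inclusive at both ends
        means.append(total / i)
    return means

means = running_means(100_000, seed=42)
for n in (10, 100, 1_000, 10_000, 100_000):
    print(n, means[n - 1])  # theoretical mean is 3.5
```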

The most common introductory example of Monte Carlo simulation is estimating Pi (π). To do so, first imagine a circle of diameter 1 inscribed in a square with side length 1.

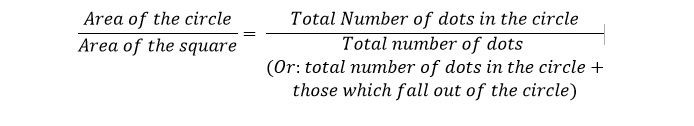

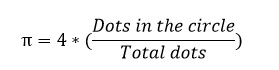

The area of the square is therefore 1, while the area of the circle is π(1/2)² = π/4. Hence:

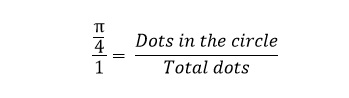

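Then, since this area ratio is the probability that a point drawn uniformly at random from the square lands inside the circle:

$$\frac{\pi}{4} \approx \frac{N_{\text{inside}}}{N_{\text{total}}}$$

where $N_{\text{inside}}$ is the number of random points that fall inside the circle and $N_{\text{total}}$ is the total number of points. And, finally:

$$\pi \approx 4 \cdot \frac{N_{\text{inside}}}{N_{\text{total}}}$$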

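Now, we randomly generate many points (x, y), with x and y each drawn uniformly from the interval [0, 1], and check whether each point falls inside the circle. In any programming language this is a simple if-else test. For the inscribed circle of diameter 1, centered at (0.5, 0.5), the condition is (x − 0.5)² + (y − 0.5)² ≤ 0.25. A common shortcut is to test x² + y² ≤ 1 instead: that checks the quarter of a unit-radius circle lying in the same square, which also has area π/4, so the same formula for π applies.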
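A minimal Python sketch of this procedure follows. The original results were produced in Scilab; this Python translation and the name `estimate_pi` are assumptions made for illustration. It tests the inscribed circle directly; replacing the condition with `x * x + y * y <= 1` gives the quarter-circle variant, which has the same expected result.

```python
import random

def estimate_pi(n, seed=None):
    """Monte Carlo estimate of pi from n random points in the unit square."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()  # uniform point in the unit square
        # Circle of diameter 1 inscribed in the square: center (0.5, 0.5), radius 0.5.
        if (x - 0.5) ** 2 + (y - 0.5) ** 2 <= 0.25:
            inside += 1
    # The fraction of points inside approximates (area of circle)/(area of square) = pi/4.
    return 4 * inside / n

print(estimate_pi(100_000, seed=0))
```

Only the "inside" branch needs code here; the "else" case simply leaves the counter unchanged.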

After all the iterations, you can calculate π with the final equation above. The results for different numbers of iterations using Scilab are as follows:

| N (iterations) | 10 | 100 | 1000 | 10000 | 100000 |
|---|---|---|---|---|---|
| Estimated π | 2.4 | 3.8 | 3.092 | 3.1444 | 3.14308 |

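As the table shows, as the number of iterations increases, and therefore more random samples of our input data enter the model, the estimates get closer and closer to the true value of π (3.14159…). Convergence is slow, however: the typical error of a Monte Carlo estimate shrinks in proportion to 1/√N, so each additional correct decimal digit costs roughly 100 times more samples.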
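To see how quickly the error shrinks, the following sketch repeats the experiment for the same values of N. It is again Python rather than the Scilab used for the table, and `pi_error_table` and the seed are hypothetical. Next to the actual error it prints the standard error 4·√(p(1 − p)/N) with p = π/4, the usual binomial formula, which falls off like 1/√N. Your numbers will not match the table above exactly, since every random stream is different.

```python
import math
import random

def pi_error_table(sizes, seed=None):
    """For each sample size N, return (N, estimate, |error|, approximate standard error)."""
    rng = random.Random(seed)
    rows = []
    for n in sizes:
        # Count points in the unit square that land in the quarter circle x^2 + y^2 <= 1.
        inside = sum(1 for _ in range(n) if rng.random() ** 2 + rng.random() ** 2 <= 1)
        estimate = 4 * inside / n
        p = math.pi / 4  # true probability that a point lands inside
        std_error = 4 * math.sqrt(p * (1 - p) / n)  # shrinks like 1/sqrt(N)
        rows.append((n, estimate, abs(estimate - math.pi), std_error))
    return rows

for n, est, err, se in pi_error_table([10, 100, 1_000, 10_000, 100_000], seed=7):
    print(f"N={n:>6}  pi~{est:.5f}  |error|={err:.5f}  std. error~{se:.5f}")
```

Multiplying N by 100 divides the standard error by 10, which is why the table gains roughly one reliable digit for every two extra zeros in N.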

It is, in fact, the coupling of the subtleties of the human brain with rapid and reliable calculations, both arithmetical and logical, by the modern computer that has stimulated the development of experimental mathematics. This development will enable us to achieve Olympian heights.

Nicholas Metropolis

Also watch

John Guttag, a computer science professor at MIT, explains Monte Carlo simulation very vividly.

Further reading

Stan Ulam, John Von Neumann, and the Monte Carlo Method

A comprehensive history of the Monte Carlo simulation by Nicholas Metropolis

Monte Carlo Methods: Early History and the Basics

An Overview of Monte Carlo Methods

Explained: Monte Carlo simulations

Hamiltonian Monte Carlo explained